In 2026, sales enablement has become a fundamental part of how most sales teams operate. Sales enablement metrics are now essential because enablement is no longer judged by activity but by outcomes.

Enablement has always provided critical support to sales teams, helping reps get sharper across stages and sales cycles. But planning and execution by themselves don’t guarantee impact. If enablement is the workout plan, sales enablement metrics are the before-and-after photo. The real test is whether reps are selling differently and whether that shift shows up in measurable results.

Today, nearly 90% of companies with sales teams have a dedicated enablement role or program. So the question isn’t, “Should we do more enablement?” It’s “Are reps selling differently, and can we see it in the numbers?”

Most quarters play out the same way. You start with a plan, the quarter moves along, and then the cracks show up. A few deals go quiet, early-stage momentum dips, and the forecast starts feeling heavier than it should. When that happens, training becomes the easy answer, because it’s the one lever everyone knows how to pull.

The problem is, training only helps when you’re clear on what’s broken. If the real issue is that reps aren’t adopting the playbook, the message isn’t sticking, or coaching isn’t translating into behavior, another session won’t change much. It just adds more activity on top of a problem you haven’t named yet.

This guide is meant to help you name it. It’s for Enablement, RevOps, Sales Leaders, L&D, and Coaches who want to use sales enablement metrics to understand what’s actually happening in the field. We’ll cover the main metric types, how to calculate them, and how to use them to decide where to focus, what to improve, and what to drop.

What Are Sales Enablement Metrics?

Sales enablement metrics connect enablement work, like training, content, coaching, playbooks, and tools, to real outcomes such as rep behavior, pipeline progress, win rates, deal speed, and forecast health. They show what’s being adopted, what’s changing in the field, and what business impact that change is creating.

Think of sales enablement metrics as “early clues” that reveal what’s working and what’s not, even before a Quarterly Business Review (QBR). Common examples include:

- Content usage in live deals (by stage and segment)

- Skill improvement after training or coaching (pre vs post scores)

- Manager coaching coverage and consistency

- Execution adherence to playbooks and methodology

- Stage progression and stall reduction linked to enablement initiatives

These metrics help leaders catch problems early, before the QBR surprises them.

The Key Difference Between Sales Enablement Metrics and KPIs

People often use ‘metrics’ and ‘KPIs’ interchangeably. They’re not the same.

Metrics are anything you measure. KPIs are the few metrics you commit to hitting, with a target and a timeline.

In simple terms: every KPI is a metric, but not every metric is a KPI.

What is a sales enablement metric?

Sales enablement metrics are systematically defined and consistently measured indicators used to evaluate the implementation, adoption, and effectiveness of sales enablement interventions.

These metrics operationalize enablement goals into observable measures of sales-rep behavior, enablement resource utilization, and intermediate performance shifts, thereby providing empirical evidence of whether enablement activities are producing intended changes within the sales system.

They answer: Did the enablement effort land, and did it shift how reps sell?

Examples:

- % of reps completing training

- Certification pass rates

- Content usage by stage or persona

- Coaching frequency and quality

- Playbook adoption

- Rep behavior change (better discovery, stronger next steps, improved talk tracks)

What is a sales enablement KPI?

Sales enablement KPIs are a crucial set of high-priority outcome measures that quantify the contribution of sales enablement to strategic sales and revenue objectives.

Unlike broader enablement metrics, KPIs represent the most decision-critical indicators of business impact, explicitly linking enablement-driven behavioral and process changes to improvements in sales performance, pipeline health, and revenue results.

A KPI is a metric you treat as “key” because it maps to a priority objective. KPIs are strategic by design. Leaders and RevOps use them as the scoreboard for key business goals.

They answer, "Did the business result improve?"

Examples:

- Win rate

- Pipeline coverage

- Conversion rates between stages

- Deal velocity or sales cycle length

- Average deal size

- Quota attainment

- Forecast accuracy

The key difference

- Enablement metrics track the enablement engine. KPIs track the business result.

- Enablement metrics are closer to the work. KPIs are the scoreboard.

- Enablement metrics are usually leading indicators. They show whether you are on track to influence results. KPIs are lagging indicators. They confirm whether results actually changed.

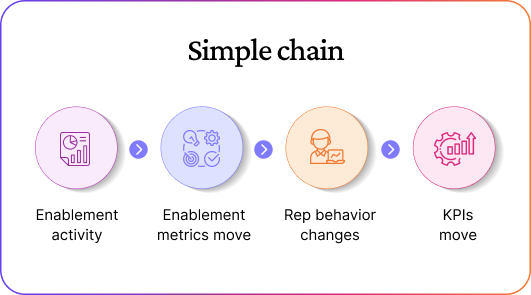

The Simple Sales Enablement KPI Flow:

Quick example

You roll out a new discovery playbook.

Enablement metrics you watch:

- Adoption of the playbook in calls

- Coaching on discovery

- Certification or skill scores

KPIs you expect to improve:

- Stage 1 to Stage 2 conversion

- Win rate

- Deal cycle length

If enablement metrics do not move first, KPIs probably will not move later.

Why enablement should measure metrics and partner on KPIs

Enablement can directly influence what reps adopt and how well they execute. That is exactly what enablement metrics show. KPIs like win rate or quota attainment are different. They are affected by many things enablement does not control, such as segment mix, lead flow, product updates, pricing changes, and market conditions.

So enablement should focus on owning the leading indicators and tracking whether behavior is changing in the field. Then partner with RevOps and Sales Leadership to connect those changes to KPI trends. Enablement supports revenue outcomes, but it should not present revenue as something it drives alone.

What Sales Enablement Metrics Actually Measure

Sales enablement metrics measure whether enablement is doing its job in the real world. Not in theory, not in slide decks. In the day-to-day of reps selling and deals moving.

Think of enablement as the set of support you give the sales team: training, playbooks, content, coaching, tools, and process changes. Enablement metrics tell you three simple things:

1. Are reps using what we gave them?

2. Are they selling differently because of it?

3. Are deals moving better in the early signals we can influence?

Below is a detailed but simple breakdown.

1. They measure adoption: did reps actually use it?

Enablement often ships something new. A training, a playbook, a new deck, a new tool, a new way to qualify deals. Adoption metrics check if reps picked it up.

What this looks like

- How many reps completed the training

- How many reps got certified

- How often a playbook or battlecard is opened

- Whether reps are using a new tool or process

Examples

- Training completion rate: 82% of reps finished the new discovery training

- Certification rate: 65% passed the pricing certification

- Content usage: enterprise reps opened the new security deck 3x more than last month

- Process adoption: 70% of deals now have the new qualification fields filled

Why this matters

If adoption is low, the problem is not selling skill yet. It is that the enablement hasn’t landed. You fix adoption first before expecting results.

2. They measure behavior change: are reps selling differently?

Adoption only tells you people touched the thing. Behavior metrics tell you whether reps changed what they do on calls, in emails, and in deals.

What this looks like

- Are reps asking better questions

- Are they using the new talk track

- Are they following the new process in live deals

- Are they handling objections the way you trained

Examples

- In call reviews, reps now cover business pain before product details

- Objection handling improved after coaching

- More reps are setting clear next steps at the end of meetings

- Reps are using the new competitor positioning in late-stage calls

Why this matters

Enablement exists to change selling behavior. If behavior stays the same, downstream business results rarely change.

3. They measure skill improvement: are reps getting better at specific skills?

Some enablement programs target a clear skill, like discovery, demo flow, negotiating, or messaging.

Skill metrics show whether reps improved in those areas.

What this looks like

- Before vs after performance in roleplays

- Manager evaluations on real calls

- Scores tied to a specific skill

Examples

- Discovery score rose from 2.8 to 3.6 after the program

- New hires hit “ready to run demos solo” in 5 weeks instead of 7

- Roleplay pass rate for objection handling increased from 50% to 75%

Why this matters

It shows whether learning is turning into ability, not just attendance.

4. They measure the quality of execution: are reps using it well?

Two teams can adopt the same playbook. One uses it well; the other uses it badly. Execution metrics separate those cases.

What this looks like

- Are reps using the right content at the right stage

- Are they applying the methodology properly

- Is deal work becoming cleaner and more consistent

Examples

- Discovery notes are more complete and structured

- Deals now show clearer buyer roles and risks earlier

- Reps are using the right deck for the right persona

- Follow-up emails are better aligned to buyer concerns

Why this matters

Enablement should not only be “used.” It should be used correctly. This is where a lot of impact is won or lost.

5. They measure early deal movement signals: are deals moving better in the places enablement can influence?

Enablement metrics can also include early pipeline signals that are close to rep behavior, not full revenue outcomes.

These are still “enablement territory” because they reflect changes reps can drive after enablement.

What this looks like

- Movement between early stages

- Better progression after key moments like discovery or demo

- Fewer deals stalling for reasons enablement targets

Examples

- After discovery training, more deals move from first meeting to qualified stage

- The number of deals stuck in stage 2 dropped

- Time between discovery and demo became shorter

- More qualified opportunities per rep after a new outbound playbook

Why this matters

These signals show whether enablement is improving the motion of deals, without claiming full business outcomes that depend on many other factors.

6. They measure enablement efficiency: is enablement running well as a function?

Enablement teams also need to know if their programs are practical and repeatable.

What this looks like

- How fast enablement rolls out

- Whether reps engage meaningfully

- Whether programs are worth doing again

Examples

- Time to launch a program

- Drop-off rate in training

- Cost per certified rep

- How often content gets refreshed

- Coaching coverage per manager

Why this matters

Even good programs fail if they are slow, heavy, or hard to sustain.

Next, we’ll look at the five important types of sales enablement metrics. We'll cover what to track and how to calculate each one.

5 Types of Sales Enablement Metrics That Actually Matter

Now that we’re clear on what enablement metrics measure, the next step is making them usable. The easiest way is to group metrics by what they help you diagnose: Did it land? Is it working? Is it sticking? Are deals moving the way they should?

For each type below, we’ll cover what it shows, what to track, and how to calculate it. We’ll also discuss where the data comes from, how to segment it, how often to review it, and what actions to take.

A. Program & Adoption Metrics

1. Module completion (supporting)

- What it tells you: Whether the training reached the reps it was assigned to (attendance/completion). It doesn’t tell you whether selling behavior changed.

- How to calculate: % of assigned reps who completed the module within the required window.

- Where the data comes from: LMS/enablement platform (course assignments and completion logs).

- How to segment: By team, manager, role, rep tenure (new vs tenured), and cohort (join month).

- Cadence: Weekly during rollout, then monthly.

- Action it drives: If completion is low, fix rollout and manager accountability; if completion is high, validate real usage next.

Directional benchmark: For required programs, most reps should complete in the first 2–3 weeks. If completion drags past a month, you likely have a rollout or manager enforcement issue, not a content issue.
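
Here is a minimal sketch of the completion calculation above. It assumes a hypothetical LMS export of (assigned date, completion date) pairs and an illustrative 21-day window; neither the data shape nor the window comes from any specific platform.

```python
from datetime import date, timedelta

def completion_rate(assignments, window_days):
    """Share of assigned reps who completed within `window_days` of assignment.

    `assignments` is a list of (assigned_on, completed_on) pairs;
    completed_on is None when the rep has not finished the module.
    """
    if not assignments:
        return 0.0
    window = timedelta(days=window_days)
    on_time = sum(
        1
        for assigned_on, completed_on in assignments
        if completed_on is not None and completed_on - assigned_on <= window
    )
    return on_time / len(assignments)

# Hypothetical export: four reps assigned on the same day.
records = [
    (date(2026, 1, 5), date(2026, 1, 9)),   # done in 4 days
    (date(2026, 1, 5), date(2026, 1, 20)),  # done in 15 days
    (date(2026, 1, 5), date(2026, 2, 10)),  # done, but outside the window
    (date(2026, 1, 5), None),               # not completed
]
rate = completion_rate(records, window_days=21)
print(f"{rate:.0%}")  # prints 50%
```

Late completions count as misses here on purpose, since the metric is defined against the required window.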

2. Time-to-first-use (after rollout)

- What it tells you: How fast reps begin using the new play, asset, or process. This indicates its relevance and how easy it is to adopt.

- How to calculate: Median days from launch (or assignment) to first observed usage (call/deal note/content share).

- Where the data comes from: Content analytics, CRM activity (attachments/links), and call tools or call notes.

- How to segment: Segment (SMB/Mid-Market/Enterprise), team, manager, tenure, and region.

- Cadence: Weekly for the first 4 to 6 weeks after launch.

- Action it drives: If adoption is slow, simplify packaging, clarify when to use it, and reinforce through managers.

Directional benchmark: If most reps don’t try it in the first 1–2 weeks, adoption is inconsistent. If it takes a month for the median rep to use it, the play may not be relevant or isn’t part of their workflow.
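
The median calculation above could look roughly like this. The per-rep first-use dates are assumed to be something you have already pulled from content analytics or CRM activity; the function name and data shape are illustrative only.

```python
from datetime import date
from statistics import median

def median_days_to_first_use(launch_date, first_use_dates):
    """Median days from launch to each rep's first observed usage.

    Reps with no observed usage yet (None) are left out of the median
    and counted separately, so a fast median cannot hide non-adopters.
    """
    days = [(d - launch_date).days for d in first_use_dates if d is not None]
    not_yet = sum(1 for d in first_use_dates if d is None)
    return (median(days) if days else None), not_yet

# Hypothetical rollout: one rep has not used the play yet.
launch = date(2026, 3, 2)
first_uses = [date(2026, 3, 4), date(2026, 3, 9), date(2026, 3, 16), None]
median_days, non_adopters = median_days_to_first_use(launch, first_uses)
print(median_days, non_adopters)
```

Reporting the non-adopter count next to the median is a design choice, not part of the definition: the median alone only describes reps who have already tried the play.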

3. Application signals (used in real calls/deals)

- What it tells you: Whether training is showing up in live selling, not just in completion stats.

- How to calculate: % of reps (or % of active opportunities) with at least one verified usage in the last X days.

- Where the data comes from: Call recordings or analysis, CRM deal notes, and any tracked playbook steps.

- How to segment: Stage, segment, tenure, manager, and performance band.

- Cadence: Weekly for managers, monthly for enablement and RevOps.

- Action it drives: If completion is high but application is low, shift to practice and coaching, and revise the play if it’s not usable.

Directional benchmark: You know it’s working when application shows up quickly after rollout and grows each week. If it stays limited to a small group, like top reps, after several weeks, there’s a translation problem: you need more practice and coaching to connect training to real calls.
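
One way to compute the "at least one verified usage in the last X days" figure is sketched below. It assumes you can produce a flat list of (rep, date) usage events from call analysis or deal notes; what counts as "verified" is left to your own definition.

```python
from datetime import date, timedelta

def application_rate(reps, usage_events, as_of, lookback_days):
    """Share of reps with at least one verified usage in the lookback window.

    `usage_events` is a list of (rep, used_on) pairs.
    """
    if not reps:
        return 0.0
    cutoff = as_of - timedelta(days=lookback_days)
    active = {rep for rep, used_on in usage_events if cutoff <= used_on <= as_of}
    # Only count reps who are on the current roster.
    return len(active & set(reps)) / len(reps)

# Hypothetical roster and events.
roster = ["ana", "ben", "cho", "dev"]
events = [
    ("ana", date(2026, 3, 20)),
    ("ana", date(2026, 3, 25)),  # repeat usage still counts the rep once
    ("ben", date(2026, 2, 1)),   # too old for a 14-day window
    ("cho", date(2026, 3, 28)),
]
rate = application_rate(roster, events, as_of=date(2026, 3, 30), lookback_days=14)
print(f"{rate:.0%}")  # prints 50%
```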

4. Talk-track/process usage (field adoption)

- What it tells you: Whether reps are using the new talk track or process consistently, not just once.

- How to calculate: % of relevant calls/deals where required talk track elements or process steps are present (within a time window).

- Where the data comes from: Call analysis, deal notes, CRM fields, and manager scorecards.

- How to segment: Manager, team, segment, stage, tenure, and optionally certified vs not certified.

- Cadence: Weekly for frontline execution, monthly for trend review.

- Action it drives: If usage is inconsistent, tighten manager coaching rhythm, add reinforcement, and remove friction from the play.

Directional benchmark: Healthy usage means consistent engagement from managers and reps over time. If usage varies by manager or declines after launch, it shows a lack of reinforcement. This means the behavior isn’t becoming standard.
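
As a sketch of the talk-track calculation above, the snippet below treats a call as compliant only when every required element was observed. The element names are hypothetical placeholders; in practice they would come from your own scorecard or call analysis tags.

```python
# Hypothetical required elements for a discovery talk track.
REQUIRED_ELEMENTS = frozenset({"business_pain", "impact_question", "next_step"})

def talk_track_usage(calls, required=REQUIRED_ELEMENTS):
    """Share of relevant calls in which every required element was observed.

    `calls` is a list of sets, one set of observed elements per call.
    """
    if not calls:
        return 0.0
    compliant = sum(1 for observed in calls if required <= set(observed))
    return compliant / len(calls)

calls = [
    {"business_pain", "impact_question", "next_step"},
    {"business_pain", "next_step"},                        # missing one element
    {"business_pain", "impact_question", "next_step", "pricing"},
    {"impact_question"},                                   # missing two elements
    {"next_step", "impact_question", "business_pain"},
]
usage = talk_track_usage(calls)
print(f"{usage:.0%}")  # prints 60%
```

Requiring all elements is the strict reading of "required elements are present". A looser variant could score partial coverage per call instead.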

B. Content & Asset Effectiveness Metrics

5. Usage in live deals

- What it tells you: Whether reps use the asset when money is on the line, rather than just viewing it.

- How to calculate: Deals where asset was used ÷ total active deals (for the relevant segment/stage).

- Where the data comes from: Enablement content platform analytics, CRM attachments/links, and sales email/sequence tools.

- How to segment: Segment, stage, persona, team, rep tenure.

- Cadence: Monthly (weekly during major launches).

- Action it drives: If usage is low, repackage the asset, clarify when to use it, and coach managers to reinforce it.

Directional benchmark: If an asset is meant to support a core stage (like discovery or evaluation), it should show up consistently in active deals for that segment; if it’s mostly getting views but rarely being used in opportunities, it’s not embedded in the selling motion.

6. Usage by stage (right asset, right moment)

- What it tells you: Whether the asset is being used at the stage it was designed for.

- How to calculate: Stage-specific deals where the asset was used ÷ total deals in that stage.

- Where the data comes from: CRM stage data plus asset usage tracking (content platform, link tracking).

- How to segment: Stage, segment, deal type (new vs expansion), and rep tenure.

- Cadence: Monthly.

- Action it drives: If it’s used in the wrong stage, fix naming, placement, and “when to use” guidance.

Directional benchmark: A strong signal occurs when the asset is used as intended. If it’s often used too early, too late, or spread across stages, your guidance is unclear. This mismatch can show that the asset's purpose doesn’t align with reality.
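
The two usage ratios above (usage in live deals and usage by stage) can be computed from the same input. This sketch assumes a hypothetical list of (stage, asset_used) pairs, one per active deal, built by joining CRM stage data with asset tracking.

```python
from collections import defaultdict

def usage_by_stage(deals):
    """Return {stage: deals where the asset was used / total deals in that stage}."""
    totals = defaultdict(int)
    used = defaultdict(int)
    for stage, asset_used in deals:
        totals[stage] += 1
        if asset_used:
            used[stage] += 1
    return {stage: used[stage] / totals[stage] for stage in totals}

def overall_usage(deals):
    """Deals where the asset was used / total active deals."""
    return sum(1 for _, asset_used in deals if asset_used) / len(deals) if deals else 0.0

# Hypothetical active deals for one segment.
deals = [
    ("discovery", True), ("discovery", False),
    ("evaluation", True), ("evaluation", True),
    ("evaluation", True), ("evaluation", False),
    ("negotiation", False),
]
rates = usage_by_stage(deals)
print(rates)
print(overall_usage(deals))
```

If the asset was built for evaluation, a table like this makes it easy to see whether usage is concentrated there or spread across stages.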

7. Micro influence on deal progression (targeted stage movement)

- What it tells you: Whether the asset is associated with better movement at the stage it’s meant to impact (directional, not full attribution).

- How to calculate: Progressed deals with asset use ÷ deals with asset use, compared to progressed deals without asset use ÷ deals without asset use (same segment/stage).

- Where the data comes from: CRM stage movement timestamps plus asset usage logs.

- How to segment: Segment, stage, Annual Contract Value, region, rep tenure.

- Cadence: Monthly or quarterly (needs enough volume).

- Action it drives: If there’s no lift, rewrite/replace the asset or tighten guidance on how it’s used in the conversation.

Directional benchmark: A useful asset shows clear differences in stage movement or time-in-stage compared to similar deals. If there’s no visible change after a few cycles, the asset might be “nice to share” but isn't actually moving the deal.
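
The with-versus-without comparison above might be sketched like this. Each input is assumed to be a list of booleans (did the deal progress?) for deals in the same segment and stage. The result is directional only: it shows association, not attribution, and small samples will swing it heavily.

```python
def progression_rate(deals):
    """Progressed deals / total deals; `deals` is a list of booleans."""
    return sum(deals) / len(deals) if deals else None

def stage_lift(with_asset, without_asset):
    """Progression rate with the asset, without it, and the difference."""
    rate_with = progression_rate(with_asset)
    rate_without = progression_rate(without_asset)
    if rate_with is None or rate_without is None:
        return None  # nothing to compare against
    return rate_with, rate_without, rate_with - rate_without

# Hypothetical counts: 12 of 20 progressed with the asset, 9 of 20 without.
with_asset = [True] * 12 + [False] * 8
without_asset = [True] * 9 + [False] * 11
rate_with, rate_without, lift = stage_lift(with_asset, without_asset)
print(f"with: {rate_with:.0%}, without: {rate_without:.0%}, lift: {lift * 100:.0f} pts")
```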

8. Manager content usage during coaching (reinforcement)

- What it tells you: Whether managers are reinforcing the asset in 1:1s and deal coaching (a strong predictor of rep adoption).

- How to calculate: Coaching sessions where asset was referenced ÷ total coaching sessions.

- Where the data comes from: Coaching notes, call review templates, and manager enablement tools.

- How to segment: Manager, team, segment, and rep tenure.

- Cadence: Monthly.

- Action it drives: If manager usage is low, equip managers with a coaching script and make the asset part of the weekly coaching rhythm.

Directional benchmark: When managers mention an asset in coaching, rep usage often increases. But if managers don’t discuss it in deal reviews or 1:1s, it won’t be a standard part of the team’s sales approach.

C. Skill & Readiness Metrics

9. Roleplay performance

- What it tells you: Whether reps can execute key skills in a controlled setting before they’re judged in live deals.

- How to calculate: Average roleplay score this period − average roleplay score last period (or baseline).

- Where the data comes from: Roleplay scorecards, certification rubrics, and L&D assessments.

- How to segment: Skill (discovery, objections, closing), rep tenure, team, manager.

- Cadence: Weekly for new hires, monthly for tenured teams.

- Action it drives: If scores stall, increase practice reps and tighten the rubric; if only a few reps improve, coach the middle.

Directional benchmark: Roleplay scores should rise with repeated core scenarios. If scores level off or fluctuate, reps need more practice. Also, the rubric must stay consistent among reviewers.

10. Call quality indicators

- What it tells you: How well reps execute in real calls (the “in the wild” view).

- How to calculate: Average call quality score this period − average call quality score last period (by skill area).

- Where the data comes from: Call recordings, call scoring rubrics, and conversation intelligence tools.

- How to segment: Stage (discovery vs. evaluation), segment, tenure, manager, and persona.

- Cadence: Weekly for managers, monthly for enablement trends.

- Action it drives: If quality drops in one stage, run a targeted coaching sprint and refresh the talk track for that stage.

Directional benchmark: Call quality should first improve in the stage you trained (like discovery) and then spread. If scores drop in one stage or vary greatly by manager, it shows uneven coaching or unclear messaging.

11. Pre/post skill improvement (after a program)

- What it tells you: Whether a specific training or coaching initiative actually improved capability.

- How to calculate: Average skill score after program − average skill score before program (same reps, same rubric).

- Where the data comes from: Pre/post assessments, roleplays, call scoring, and manager scorecards.

- How to segment: Program cohort, tenure, segment, manager.

- Cadence: After 2–4 weeks post-rollout (then monthly check).

- Action it drives: If there’s no lift, the program isn’t landing; adjust the content, practice format, or manager reinforcement.

Directional benchmark: You should see a clear lift within a few weeks after rollout if the program is practical and reinforced; if scores barely move, the issue is usually lack of practice, weak reinforcement, or the training not matching real call situations.
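
A rough sketch of the pre/post calculation is below. It keeps the "same reps, same rubric" condition by only comparing reps who have both scores. The score dictionaries and rep names are hypothetical.

```python
from statistics import mean

def pre_post_lift(pre_scores, post_scores):
    """Average post-program score minus average pre-program score,
    restricted to reps who were scored both before and after."""
    shared = sorted(pre_scores.keys() & post_scores.keys())
    if not shared:
        return None
    return mean(post_scores[r] for r in shared) - mean(pre_scores[r] for r in shared)

# Hypothetical rubric scores on a 1-5 scale.
pre = {"ana": 2.5, "ben": 3.0, "cho": 3.0, "dev": 2.0}   # dev has no post score
post = {"ana": 3.5, "ben": 3.5, "cho": 4.0, "eli": 4.5}  # eli has no pre score
lift = pre_post_lift(pre, post)
print(round(lift, 2))
```

Dropping unmatched reps avoids a false lift caused by a changing cohort, such as a strong new hire who only appears in the post scores.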

12. Execution consistency across reps

- What it tells you: Whether good execution is spreading beyond top reps (consistency is what scales).

- How to calculate: Percentage of reps meeting the execution standard this period − percentage meeting it last period.

- Where the data comes from: Call scoring, roleplay rubrics, manager scorecards, and deal reviews.

- How to segment: Team, manager, tenure, and performance band.

- Cadence: Monthly.

- Action it drives: If consistency is low, standardize coaching with a shared rubric and focus on the one skill causing the most variance.

Directional benchmark: Over time, the gap between your top reps and the middle should close on the basics. If only top reps meet the standard while the middle stays flat, you have a scaling problem, not a content problem.
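
The period-over-period change above could be computed along these lines. The execution standard (3.5 on a 1-5 rubric) and the scores are illustrative assumptions, not recommended thresholds.

```python
def share_meeting_standard(scores, standard):
    """Share of reps whose score is at or above the execution standard."""
    if not scores:
        return 0.0
    return sum(1 for s in scores.values() if s >= standard) / len(scores)

def consistency_change(last_period, this_period, standard):
    """Change in the share of reps meeting the standard, in percentage points."""
    return 100 * (
        share_meeting_standard(this_period, standard)
        - share_meeting_standard(last_period, standard)
    )

# Hypothetical call-scoring averages per rep.
last_period = {"ana": 4.2, "ben": 2.9, "cho": 3.1, "dev": 2.5, "eli": 3.8}
this_period = {"ana": 4.3, "ben": 3.6, "cho": 3.5, "dev": 2.8, "eli": 3.9}
change = consistency_change(last_period, this_period, standard=3.5)
print(f"{change:+.0f} pts")
```

Because the metric counts reps rather than averaging scores, a jump from two top performers cannot mask a flat middle.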

D. Coaching Metrics

13. Coaching frequency

- What it tells you: How regularly coaching is happening (if frequency drops, execution usually drifts).

- How to calculate: Total coaching sessions completed ÷ total reps (per week or month).

- Where the data comes from: Manager 1:1 logs, coaching tools, and calendar/CRM notes (if standardized).

- How to segment: Manager, team, rep tenure, and performance band.

- Cadence: Weekly.

- Action it drives: If frequency is low, set a minimum coaching rhythm and make it part of the manager's operating cadence.

Directional benchmark: Coaching must be regular, not just when it’s convenient. If coaching stops during busy weeks and doesn’t pick back up, execution will suffer. This means rollouts won’t have a positive effect.

14. Coaching coverage

- What it tells you: Whether coaching is reaching the whole team, not just a few reps.

- How to calculate: Reps coached at least once in the last period ÷ total reps.

- Where the data comes from: Coaching logs, 1:1 templates, and call review records.

- How to segment: Manager, team, tenure, ramping vs tenured.

- Cadence: Weekly or biweekly.

- Action it drives: If coverage is uneven, standardize who gets coached and when (especially new and mid performers).

Directional benchmark: Coaching should involve the entire team regularly. If only new or struggling reps receive coaching, performance gaps will grow, and the average performers will remain just that.
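
Frequency and coverage are worth computing side by side, because one can look healthy while the other does not. The sketch below assumes a hypothetical coaching log of (rep, manager) entries for the period.

```python
def coaching_frequency(sessions, reps):
    """Total coaching sessions completed / total reps for the period."""
    return len(sessions) / len(reps) if reps else 0.0

def coaching_coverage(sessions, reps):
    """Reps coached at least once in the period / total reps."""
    if not reps:
        return 0.0
    coached = {rep for rep, _ in sessions} & set(reps)
    return len(coached) / len(reps)

# Hypothetical log: six sessions, but only two of four reps were coached.
roster = ["ana", "ben", "cho", "dev"]
sessions = [
    ("ana", "mgr1"), ("ana", "mgr1"), ("ana", "mgr1"),
    ("ben", "mgr1"), ("ana", "mgr1"), ("ben", "mgr1"),
]
print(coaching_frequency(sessions, roster))  # prints 1.5
print(coaching_coverage(sessions, roster))   # prints 0.5
```

In this example, 1.5 sessions per rep sounds fine on its own, yet half the team received no coaching at all.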

15. Coaching quality

- What it tells you: Whether coaching is skill-based and actionable or generic and forgettable.

- How to calculate: Coaching sessions meeting a quality standard ÷ total coaching sessions (based on a simple rubric).

- Where the data comes from: Coaching notes, call review scorecards, and manager checklists.

- How to segment: Manager, team, skill area (discovery, objections, closing).

- Cadence: Monthly.

- Action it drives: If quality is low, introduce a shared coaching rubric and train managers on how to use it.

Directional benchmark: Good coaching is tied to a specific skill, a real example, and a clear next step; if most coaching sounds like general advice, it won’t change behavior.

16. Reinforcement behavior

- What it tells you: Whether coaching drives habit change (repeated reinforcement is what makes behaviors stick).

- How to calculate: Reps coached on the same priority skill at least twice ÷ reps coached (in the period).

- Where the data comes from: Coaching plans, recurring coaching themes in notes, and scorecards.

- How to segment: Manager, tenure, priority skill, team.

- Cadence: Monthly.

- Action it drives: If reinforcement is low, run time-boxed coaching sprints focused on one skill and track follow-ups.

Directional benchmark: Changing behavior often requires practice over several weeks. If managers don’t revisit the same skill often, improvements will be short-lived and uneven.
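
The reinforcement ratio above might be sketched as follows, assuming coaching notes have been tagged with a skill so that each session reduces to a (rep, skill) pair. The tagging itself is an assumption about your process.

```python
from collections import Counter

def reinforcement_rate(sessions, priority_skill):
    """Reps coached on `priority_skill` at least twice / reps coached at all."""
    coached = {rep for rep, _ in sessions}
    if not coached:
        return 0.0
    counts = Counter(rep for rep, skill in sessions if skill == priority_skill)
    reinforced = sum(1 for n in counts.values() if n >= 2)
    return reinforced / len(coached)

# Hypothetical month of tagged coaching sessions.
sessions = [
    ("ana", "discovery"), ("ana", "discovery"),
    ("ben", "discovery"), ("ben", "objections"),
    ("cho", "closing"),
    ("dev", "discovery"), ("dev", "discovery"), ("dev", "discovery"),
]
rate = reinforcement_rate(sessions, priority_skill="discovery")
print(f"{rate:.0%}")  # prints 50%
```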

Common Mistakes Teams Make When Tracking Enablement Metrics

After you track the right metrics, the next challenge is to avoid traps. These traps can make enablement reporting messy, biased, or ignored. Most teams don’t fail because they “don’t have data.” They fail for several reasons: they track the wrong things, track too much, or don’t turn metrics into useful actions for managers.

1. Tracking too many metrics

If you track everything, you’ll spend more time reporting than improving, and nobody knows what to focus on.

What it looks like

- Dozens of metrics across every tool

- No one agrees what matters most

- Teams spend more time reporting than improving

What's the fix: Choose a few metrics for each category: adoption, content, skill, coaching, and early-stage signals. Focus only on those that help answer, “What should we coach or change next?”

2. Confusing activity with impact

If you celebrate completions and attendance, you’ll miss the real question: did anything change in how reps sell?

What it looks like

- Training attendance becomes the headline

- Content views are treated like influence

- “We delivered it” replaces “Did it change execution?”

What's the fix: Treat activity metrics as supporting signals only. The real measures are application and execution: talk tracks used in calls, content used in live deals, coaching reinforcement, and early-stage progression.

3. Misalignment with RevOps on definitions

If Enablement and RevOps define the same metric differently, alignment won’t happen. Instead, you’ll have meetings arguing over whose dashboard is correct.

What it looks like

- Two dashboards show two different numbers for the same thing

- Stage conversion arguments in forecast calls

- Trust in reporting drops

What's the fix: Lock definitions with RevOps once. Clarify what counts as stage entry, what qualifies as an opportunity, what “usage” means, and which time windows apply. One source of truth, one logic, no shadow dashboards.

4. No context for frontline managers

If managers don’t know what a metric means for this week’s coaching, the metric won’t change anything.

What it looks like

- Managers say “Interesting” and keep coaching from gut feel

- Metrics are reviewed but don’t change 1:1s or call reviews

- Enablement owns the dashboard, but behavior doesn’t change

What's the fix: Translate metrics into coaching prompts. If a signal drops, the manager should immediately know:

- what skill to coach,

- what call segment to review,

- what “good” looks like,

- what to reinforce next week.

5. Ignoring call insights and qualitative signals

If you only look at dashboards, you’ll see the problem late, and you still won’t know what reps are saying (or skipping) on calls.

What it looks like

- Conversion drops but you can’t explain it

- Talk-track usage looks “fine,” but calls are messy

- Coaching feels random because there’s no evidence

What's the fix: Pair quantitative metrics with lightweight qualitative signals: call review themes, common objections, weak next steps, and messaging misses. You don’t need a perfect system, just enough signal to explain patterns.

6. Not mapping metrics to specific programs or behaviors

If you don’t connect metrics to specific rollouts and behaviors, you’ll report numbers without knowing what caused them to move.

What it looks like

- “Here are the metrics,” with no link to what changed

- Programs launch, but measurement doesn’t change

- Leadership asks, “So what did enablement actually do?”

What's the fix:

For every enablement initiative, define up front:

- the behavior you expect to change,

- the metric that proves it,

- the early pipeline signal you expect to improve,

- and the review window.
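
One lightweight way to enforce that checklist is to write each initiative down as a small record and flag anything left blank. The sketch below is only an illustration: the class name, field names, and the 30-day window are hypothetical, and the example values reuse the discovery playbook scenario from earlier in this guide.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MeasurementPlan:
    initiative: str
    expected_behavior: str       # the behavior you expect to change
    proof_metric: str            # the metric that proves it
    early_pipeline_signal: str   # the early pipeline signal you expect to improve
    review_window_days: int      # the review window

    def missing_fields(self):
        """Names of fields left empty (blank text or a zero-day window)."""
        return [name for name, value in vars(self).items() if not value]

plan = MeasurementPlan(
    initiative="New discovery playbook",
    expected_behavior="Reps cover business pain before product details",
    proof_metric="Adoption of the playbook in calls",
    early_pipeline_signal="Stage 1 to Stage 2 conversion",
    review_window_days=30,
)
draft = MeasurementPlan("New pricing deck", "Reps lead with value", "Usage in live deals", "", 0)

print(plan.missing_fields())
print(draft.missing_fields())
```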

That’s how you keep enablement measurement useful: fewer metrics, clearer definitions, manager-ready context, and a direct line from enablement work to execution changes.

Conclusion: Metrics-Led Enablement Is the New Standard

If there’s one takeaway here, it’s this: enablement metrics help you run the team with your eyes open. They give you signals early, before the quarter is already gone, so you can see what’s landing in the field, what’s not working, and where deals are starting to slow down.

When the right metrics are in place, coaching gets more specific, execution gets more consistent, and course correction gets faster because you’re fixing the real issue (message, process, or coaching), not adding more activity. It also makes the org easier to run: when Enablement, RevOps, and Sales Leadership share definitions and review the same signals on the same cadence, meetings move from debating dashboards to deciding what to change next.

Outdoo helps teams put this into practice by standardizing skill and coaching signals and turning them into clear actions for managers. If you want to see how it fits your workflow, request a demo.

Frequently Asked Questions

Sales enablement KPIs are high-priority outcome measures that quantify the contribution of sales enablement to strategic sales and revenue objectives. Examples include win rate, pipeline coverage, conversion rates between stages, deal velocity, average deal size, quota attainment, and forecast accuracy.

This guide emphasizes the importance of tracking sales enablement metrics to assess how enablement efforts are impacting sales outcomes.

The future of sales enablement is focused on measuring outcomes rather than just activities. As enablement becomes more integral to sales processes, metrics will play a critical role in evaluating effectiveness and driving improvements.

Sales enablement effectiveness can be measured through various metrics that track adoption, behavior change, skill improvement, execution quality, and early deal movement signals. These metrics provide insights into whether enablement initiatives are translating into improved sales performance.

Outdoo enhances sales enablement by providing a comprehensive AI-driven platform that integrates roleplay practice, live customer interactions, and post-call assessments. This seamless connection allows enterprise teams to practice realistic scenarios, apply their skills in real-time, and receive structured feedback that validates behavioral changes. With adaptive AI simulations that reflect actual buyer behavior, Outdoo ensures that coaching translates into measurable performance improvements, empowering organizations to close the loop between training and execution effectively.
